Artificial Intelligence: Transparency

The AIA proposal, whose material scope is AI systems, lays down a concept of transparency that differs from the term of the same name in the GDPR, whose material scope is not systems but the processing of personal data. The two notions of transparency involve different actors and different information intended for different recipients. Transparency under the AIA proposal is information that flows mainly from providers of AI systems to AI users/deployers. When AI systems are part of, or are means of, a personal data processing, controllers should obtain enough information about them to fulfil their various obligations under the GDPR. These include implementing transparency and enabling the exercise of rights, complying with the principle of accountability, and meeting the requests of the GDPR Supervisory Authorities in the exercise of their investigative powers. The same applies to the requests of certification bodies and code of conduct monitoring bodies.

The GDPR principle of “transparency” is laid down in Article 5(1)(a), explained in Recitals 39 and 58 to 62, and detailed in Article 12 and following. Applying the GDPR principle of transparency (let’s call it Transparency-GDPR) is an obligation imposed on data controllers to inform data subjects about the impact of the processing of their personal data.

On the other hand, “transparency” is also a term used in relation to Artificial Intelligence (AI). For example, the proposed AI Act (AIA) lays down transparency duties for certain AI systems. Article 4a(1)(d) (proposed by the Parliament) states that “‘transparency’ means that AI systems shall be developed and used in a way that allows appropriate traceability and explainability, while making humans aware that they communicate or interact with an AI system as well as duly informing users of the capabilities and limitations of that AI system and affected persons about their rights;”. Article 13, titled “Transparency and provision of information to users”, states in paragraph 1 that “High-risk AI systems shall be designed and developed in such a way to ensure that their operation is sufficiently transparent with a view to achieving compliance with the relevant obligations of the user and of the provider set out in Chapter 3 of this Title and enabling users to understand and use the system appropriately”. In paragraph 2, it states that they “shall be accompanied by instructions for use in an appropriate digital format or otherwise that include concise, complete, correct and clear information that is relevant, accessible and comprehensible to users”. Article 52(1) also states that “Providers shall ensure that AI systems intended to interact with natural persons are designed and developed in such a way that natural persons are informed that they are interacting with an AI system”.

The transparency obligations for AI systems should be implemented by design and throughout their life cycle, regardless of whether the systems process personal data. Therefore, the term “transparency” in the AIA (Transparency-AIA) has a different meaning from Transparency-GDPR.

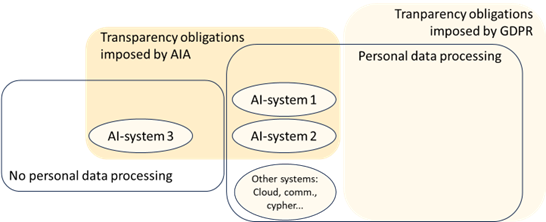

Transparency-AIA applies to AI systems, while Transparency-GDPR applies to personal data processing activities as defined in Article 2 and Article 4(2) of the GDPR. An AI system could be one of the means used to implement one or more personal data processing activities. Usually, a personal data processing activity will be implemented over several kinds of systems, such as cloud systems, communication systems, mobile systems and encryption systems. Some of them could be AI systems.

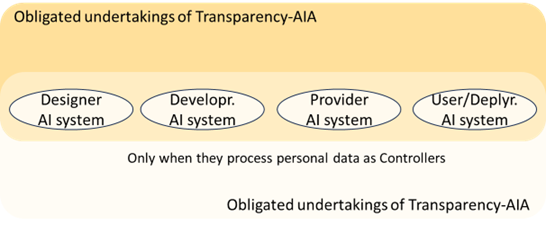

Transparency-AIA imposes obligations on designers, developers, providers and users/deployers of AI systems. Transparency-GDPR imposes obligations on the controllers of personal data processing activities. Designers and developers could be controllers or processors if they use personal data in the design or development of the AI system. Providers could be controllers or processors if their AI systems store or handle data of identified or identifiable data subjects. Users/deployers of an AI system could be controllers or processors if they include that system in a personal data processing activity. All those acting as controllers must fulfil the Transparency-GDPR obligations. Those who are not controllers are not bound by them.

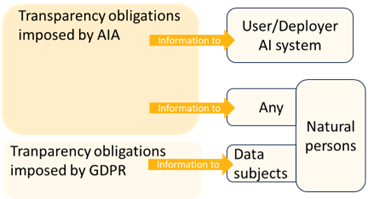

Transparency-AIA requires that information be given to users/deployers of AI systems and to the natural persons, or groups of persons, affected by an AI system. These natural persons could be affected even when they are not data subjects (as defined in the GDPR), for example when they are recipients of media content generated by an AI system.

Transparency-GDPR lays down obligations for controllers to inform data subjects about personal data processing activities, including automated decision-making and profiling. Not all automated decisions are implemented by AI systems. Conversely, not all AI systems used in personal data processing activities involve automated decision-making or profiling within the meaning of Article 22(1) and (4) of the GDPR.

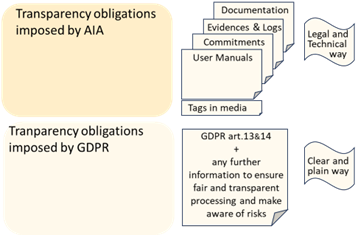

Furthermore, the kind of information that must be delivered under Transparency-AIA differs from that required under Transparency-GDPR. Different senders, different recipients and different purposes mean a different amount and kind of information to be delivered. In any case, the information to be delivered is laid down by each regulation.

The GDPR sets out the minimum information to be provided in Articles 13 and 14. Recitals 39 and 58 to 62 explain that this information should make natural persons “aware of risks”, the “existence of profiling and the consequences of such profiling”, the “controllers”, the “purposes”, the “rights”, the “safeguards” and “any further information necessary to ensure fair and transparent processing taking into account the specific circumstances and context in which the personal data are processed”. It should do so in an “easy to understand” way, using “clear and plain language”. Where automated decision-making, including profiling, exists, it should also provide, at least, meaningful information about the logic involved, as well as the significance and the envisaged consequences of such processing.

Transparency-AIA information delivered to users/deployers relates to explainability (Article 13, Article 4a of the Parliament version and Recital 38 of the AIA), documentation, record-keeping and the provision of information on how to use the AI system (Recital 43 of the AIA). It should allow users/deployers to fulfil their compliance duties under the AIA. Transparency-AIA towards natural persons is set out in Article 52(1) of the proposed AIA. It relates to the duty to inform natural persons that they are interacting with an AI system.

In conclusion, Transparency-GDPR and Transparency-AIA have different meanings, lay down duties for different actors, refer to different kinds of information in both content and wording, and are intended for different recipients. Therefore, giving data subjects the same Transparency-AIA information intended for users/deployers would not fulfil the Transparency-GDPR duties.

When the user/deployer acts as controller or processor, it is obliged to comply with the GDPR accountability principle. It would be impossible to do so if the systems used to implement the processing activity are not accountable. The means of the processing include AI systems, cloud systems, mobile systems, communication systems and others. If these are not properly documented and do not provide evidence of the required performance, privacy and security, the controller should not use them. Therefore, implicitly, the information available under Transparency-AIA should be sufficient to allow controllers and processors to fulfil their various GDPR obligations.

Finally, transparency obligations should not be confused with the investigative powers of the Supervisory Authorities, the assessments of certification bodies or the monitoring activities of code of conduct bodies. The information requested by and delivered to these bodies should be complete and detailed enough for them to exercise their powers. That information goes far beyond what is needed to fulfil the Transparency-GDPR obligations.

This post is related to other material released by the AEPD’s Innovation and Technology Division, such as:

• Post Artificial Intelligence: accuracy principle in the processing activity

• Post AI: System vs Processing, Means vs Purposes

• Post Federated Learning: Artificial Intelligence without compromising privacy

• GDPR compliance of processing that embed Artificial Intelligence. An introduction

• Audit Requirements for Personal Data Processing Activities involving AI

• 10 Misunderstandings about Machine Learning

• Reference map of personal data processing that embed artificial intelligence